Bayer Sensor Technology

By Bokeh Rentals | July 9th, 2021

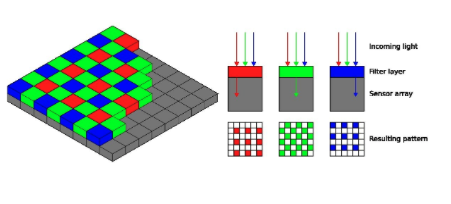

One of the greatest things that could happen to digital cinema would be the ability to record the color, or wavelength, of light directly. Sensors would gain two to three stops of dynamic range, around 40% more detail, and much more accurate color rendition. Sadly, photosites are colorblind: they record only the amount of light, not its wavelength. Camera systems have therefore relied on workarounds to record color, each with its own complex engineering and flaws. Film stocks used layers of dyes, and early digital camera systems used prisms to split incoming light by wavelength onto separate sensors. Now we are working in the era of the CFA, or color filter array: a filter laid over the sensor in a specific pattern of red, green, and blue, so that each photosite receives only one band of light.

The Bayer filter array is the most commonly used CFA. The pattern is shown in the image below: rows alternate between red-green and green-blue, so each green photosite is bordered by two red and two blue photosites. Half of the photosites are green, a quarter are red, and a quarter are blue. Each photosite is sensitive to only one color, and in the majority of camera systems, each photosite corresponds to one pixel in the final image.
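To make the layout concrete, here is a small sketch that builds a Bayer mask and checks the 50/25/25 split and the two-red, two-blue neighborhood of a green photosite. It assumes an "RGGB" tile (red-green on top, green-blue below); real sensors may start the pattern on a different corner, and the function names are hypothetical helpers for illustration only.

```python
from collections import Counter


def bayer_color(row: int, col: int) -> str:
    """Filter color at (row, col), assuming an RGGB tile (R G / G B).

    Assumption: real sensors may start the pattern on another corner
    (GRBG, BGGR, ...); RGGB is chosen here only for illustration.
    """
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"  # red-green rows
    return "G" if col % 2 == 0 else "B"      # green-blue rows


def bayer_mask(height: int, width: int) -> list[list[str]]:
    """Color of the filter over every photosite of a height x width sensor."""
    return [[bayer_color(r, c) for c in range(width)] for r in range(height)]


def color_fractions(mask: list[list[str]]) -> dict[str, float]:
    """Share of photosites sitting behind each filter color."""
    counts = Counter(color for row in mask for color in row)
    total = sum(counts.values())
    return {color: counts[color] / total for color in "RGB"}


def neighbor_colors(mask: list[list[str]], row: int, col: int) -> list[str]:
    """Sorted colors of the four edge-adjacent photosites (interior sites only)."""
    offsets = ((-1, 0), (1, 0), (0, -1), (0, 1))
    return sorted(mask[row + dr][col + dc] for dr, dc in offsets)


mask = bayer_mask(4, 4)
for line in mask:
    print(" ".join(line))
print(color_fractions(mask))        # {'R': 0.25, 'G': 0.5, 'B': 0.25}
print(neighbor_colors(mask, 1, 2))  # ['B', 'B', 'R', 'R'] around a green site
```

Because the pattern repeats every 2x2 tile, any sensor with even dimensions has exactly this split; the tile itself is where the "twice as much green" comes from.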

This confused me for a long time. How do we get the millions of colors we see in films? It seems that to get one accurate color pixel in the final image, you would need one green, one red, and one blue photosite on the sensor. It turns out that cameras are a lot smarter than that. Each photosite measures only one color channel, and algorithms estimate the two missing channels from the values recorded by neighboring photosites, millions of times for each frame. This process is called demosaicing. This math accounts for a lot of the differences between camera systems and the images they produce.
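The simplest demosaicing method is bilinear interpolation, and it is a useful way to see the idea in code. The sketch below is a minimal version under stated assumptions: an RGGB layout, plain Python lists instead of image buffers, and hypothetical helper names. Real cameras use far more sophisticated (and proprietary) algorithms. `mosaic` simulates the CFA by keeping only the channel each photosite's filter passes; `demosaic_bilinear` keeps the measured value and fills each missing channel with the mean of the same-colored photosites in the surrounding 3x3 window.

```python
def bayer_color(row: int, col: int) -> str:
    """Filter color at (row, col) for an assumed RGGB layout."""
    if row % 2 == 0:
        return "R" if col % 2 == 0 else "G"
    return "G" if col % 2 == 0 else "B"


def mosaic(rgb):
    """Simulate the CFA: keep only the channel each photosite's filter passes."""
    index = {"R": 0, "G": 1, "B": 2}
    return [
        [pixel[index[bayer_color(r, c)]] for c, pixel in enumerate(row)]
        for r, row in enumerate(rgb)
    ]


def demosaic_bilinear(raw):
    """Estimate (R, G, B) at every photosite by bilinear interpolation.

    The measured channel is kept as-is; each missing channel is the mean of
    the same-colored photosites in the surrounding 3x3 window. Away from the
    borders, on an RGGB layout, that is exactly bilinear interpolation over
    2 or 4 neighbors.
    """
    height, width = len(raw), len(raw[0])
    if height < 2 or width < 2:
        raise ValueError("need at least one full 2x2 Bayer tile")
    out = []
    for r in range(height):
        out_row = []
        for c in range(width):
            pixel = []
            for channel in "RGB":
                if bayer_color(r, c) == channel:
                    pixel.append(raw[r][c])  # measured directly, no guessing
                    continue
                samples = [
                    raw[rr][cc]
                    for rr in range(max(r - 1, 0), min(r + 2, height))
                    for cc in range(max(c - 1, 0), min(c + 2, width))
                    if bayer_color(rr, cc) == channel
                ]
                pixel.append(sum(samples) / len(samples))  # interpolated
            out_row.append(tuple(pixel))
        out.append(out_row)
    return out


# A flat orange patch survives the mosaic/demosaic round trip exactly.
flat = [[(200, 100, 50)] * 4 for _ in range(4)]
print(demosaic_bilinear(mosaic(flat))[1][1])  # (200.0, 100.0, 50)
```

On a flat patch every neighbor agrees, so the guesses are perfect. On a sharp edge the neighbors disagree, and averaging them produces the softening and color fringes that better algorithms work hard to avoid.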

Part of the reason Bayer sensors work is that our eyes are much more sensitive to changes in brightness than to changes in color. So even though the CFA reduces color detail, it does not ruin the image’s apparent resolution. Look at the image above: what immediately catches your eye is the contrast between the near-white fire and the shadows in the forest and water, which are changes in exposure, not in color.

There are twice as many green photosites as red or blue because human eyes are most sensitive to green light, so our perception of brightness depends primarily on green wavelengths.
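One way to put numbers on green's dominance is the luma formula from the ITU-R BT.709 HD video standard, which weights red, green, and blue by 0.2126, 0.7152, and 0.0722. These weights come from a video standard, not from any camera sensor; the sketch below borrows them only to show how much more a change in green moves perceived brightness than the same change in red or blue. The `luma` helper is a hypothetical name for illustration.

```python
# ITU-R BT.709 luma weights. Borrowed from a video standard purely to
# illustrate how heavily perceived brightness leans on green.
BT709_WEIGHTS = {"R": 0.2126, "G": 0.7152, "B": 0.0722}


def luma(r: float, g: float, b: float) -> float:
    """Brightness estimate from R, G, B values in the range 0..1."""
    w = BT709_WEIGHTS
    return w["R"] * r + w["G"] * g + w["B"] * b


# Nudge each channel of a mid-gray by the same amount and compare.
base = luma(0.5, 0.5, 0.5)
for channel in "RGB":
    bumped = [0.5, 0.5, 0.5]
    bumped["RGB".index(channel)] += 0.1
    print(channel, round(luma(*bumped) - base, 4))
```

The same nudge to green shifts brightness roughly ten times more than a nudge to blue, which is why spending half of the photosites on green is a sensible trade.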

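The downside of this way of determining color is that it loses a lot of light. A lot. Each filter passes only its own band of wavelengths, roughly a third of the visible spectrum, so about two-thirds of the incoming light is rejected by the sensor’s CFA.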
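A back-of-the-envelope model shows where the loss comes from. The sketch below assumes idealized box filters that each pass exactly one third of the visible band and block the rest; real CFA dyes overlap and are not perfectly transmissive, so treat the result as a rough illustration rather than a measured figure for any camera. `cfa_light_budget` is a hypothetical helper name.

```python
import math

# Idealized assumption: each filter is a perfect box passing exactly one
# third of the visible band. Real CFA dyes overlap and absorb some light
# even inside their band, so this is only an order-of-magnitude model.
PASSBAND_FRACTION = {"R": 1 / 3, "G": 1 / 3, "B": 1 / 3}
BAYER_TILE = ("R", "G", "G", "B")


def cfa_light_budget(tile, passband):
    """Return (transmitted fraction, rejected fraction, stops lost) for a tile."""
    transmitted = sum(passband[color] for color in tile) / len(tile)
    rejected = 1 - transmitted
    stops_lost = math.log2(1 / transmitted)  # one stop = a factor of 2 in light
    return transmitted, rejected, stops_lost


t, rej, stops = cfa_light_budget(BAYER_TILE, PASSBAND_FRACTION)
print(f"transmitted {t:.2f}, rejected {rej:.2f}, about {stops:.2f} stops lost")
```

Under these assumptions the filter throws away two-thirds of the light, which works out to a bit more than one and a half stops of sensitivity.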

Another problem is that the filter array severely reduces image detail. Even if the demosaicing algorithm is extremely precise, some guessing is still involved, which softens the final image and can cause artifacts such as false color and moiré. This can be mitigated by shooting at a higher resolution than the deliverable format and scaling down in post-production.
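The simplest way to see why oversampling helps is the case where the deliverable is exactly half the capture resolution in each direction. Each output pixel can then be built from one RGGB tile, taking the red value, the average of the two greens, and the blue value, so all three channels are measured rather than interpolated. The sketch below shows this 2x2 binning under assumed conditions (RGGB layout, hypothetical `bin_bayer_2x2` helper). Real post-production pipelines usually debayer at full resolution and then resize with a filter, and binning ignores the small offset between the red, green, and blue sample positions within a tile.

```python
def bin_bayer_2x2(raw):
    """Collapse each RGGB tile into one RGB pixel (assumed RGGB layout).

    Every channel in the output comes from a real measurement in that tile;
    only the two greens are averaged. The output is half the size in each
    dimension.
    """
    height, width = len(raw), len(raw[0])
    if height % 2 or width % 2:
        raise ValueError("expects even dimensions (whole RGGB tiles)")
    out = []
    for r in range(0, height, 2):
        out_row = []
        for c in range(0, width, 2):
            red = raw[r][c]
            green = (raw[r][c + 1] + raw[r + 1][c]) / 2
            blue = raw[r + 1][c + 1]
            out_row.append((red, green, blue))
        out.append(out_row)
    return out


raw = [
    [200, 90, 210, 100],
    [110, 40, 100, 60],
]
print(bin_bayer_2x2(raw))  # [[(200, 100.0, 40), (210, 100.0, 60)]]
```

The trade is resolution for certainty: a 4K Bayer capture binned this way yields a full-color image at half the width and height, with no guessed channels.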